In high-profile discussions of deep learning, whether on professional panels or in the media, leading experts consistently point to medical diagnostics as one of the technology’s most exciting and significant applications. Yet there is a disconnect: AI-based medical diagnostics is getting plenty of hype, but not nearly enough action.

AI has begun to affect people’s lives in some superficial ways: it shows people ads that are more relevant to them and lets them play a specific song by speaking to a smart speaker. These services add some value, but there are signs that AI’s impact can be far more significant. In April 2018, the FDA approved the first autonomous AI diagnostic system, which screens for diabetic retinopathy, a diabetes complication that can lead to vision loss if it is not caught early. We need more breakthrough stories like this, which means we need more data scientists to take on such challenges.

I’d like to invite more data scientists to join this pursuit. Consider this your formal invitation, and I’ll share my own experience in this space as a guide. Through SVSG, I have begun collaborating with researchers from UCSF and Stanford on a project with Google Cloud to offer a free program for detecting breast cancer in mammograms. Our aim is to make this service available to women all over the world, completely free of charge.

In this post—drawing on a talk I gave at the Association for Computing Machinery—I’ll give an overview of deep learning in medical imaging, and in a follow-up post I will take a more technical dive into the methodology of such projects.

Smarter than we are

The healthcare space is starting to see some AI activity. According to CB Insights’ AI in Healthcare report, over 100 startups are already building AI solutions for healthcare, and tech giants such as Google, IBM, Amazon, and Apple are starting to make big investments in this space.

This might seem like an impressive amount of activity, but it falls short of what the evidence says about the potential of deep learning in healthcare. Researchers have produced eye-popping results, and news of algorithms surpassing human performance on diagnostic tasks is becoming routine. In 2018, an algorithm developed at Case Western Reserve University correctly identified breast cancer in tissue slides in 100% of test samples. In January, researchers at UCSF went one better, designing an algorithm that could diagnose Alzheimer’s disease six years before a human radiologist. Some programs outperform human physicians not just at one task but across several related, rare competencies that would normally be spread over several specialists: an app called Face2Gene identifies rare genetic disorders from a photo of a patient’s face taken on an iPhone.

These use cases matter enormously to the patients they affect, because many of these diseases can be treated far more effectively, or even cured, when they are detected early. In skin cancer, for example, the five-year survival rate is 92-97% for patients diagnosed at stage 1, versus 15-20% for those diagnosed at stage 4. And just as these solutions address real problems in human health, they are also well-chosen applications of deep learning.

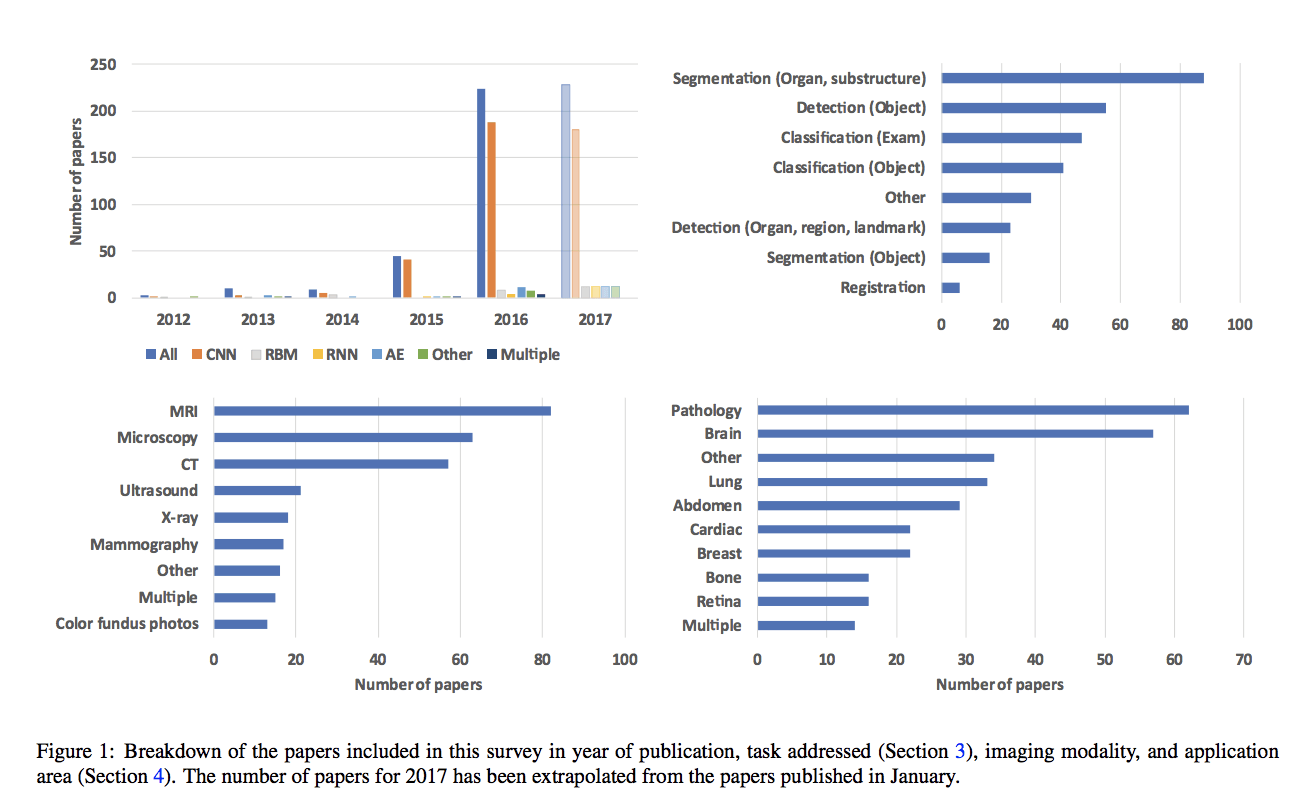

Deep learning is a branch of machine learning that uses multi-layered neural networks, loosely inspired by the brain, to learn patterns directly from data. Unlike many other machine learning methods, deep learning algorithms do not require manual feature engineering: given well-labeled training data, they learn the relevant features on their own. In imaging, however, they are still mostly limited to classification, segmentation, and detection tasks.
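To make “multi-layered” concrete, here is a minimal sketch of a forward pass through a tiny two-layer network that maps raw pixel values straight to class probabilities, with no hand-crafted features in between. The image, the weights, and the class names (`benign`, `malignant`) are hypothetical placeholders; in a real model the weights would be learned from labeled scans via backpropagation, a step this sketch deliberately omits.

```python
import math


def relu(values):
    """Element-wise rectified linear unit: negative values become 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(pixels, layers):
    """Pass raw pixels through each layer, applying ReLU after every hidden layer."""
    activations = pixels
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:  # no ReLU on the output layer
            activations = relu(activations)
    return softmax(activations)


# Hypothetical 2x2 grayscale "image", flattened to 4 raw pixel intensities.
image = [0.9, 0.1, 0.8, 0.2]

# Hypothetical weights; a real model would learn these from labeled scans.
layers = [
    # Hidden layer: 4 inputs -> 3 units.
    ([[0.5, -0.2, 0.3, 0.1],
      [-0.4, 0.6, -0.1, 0.2],
      [0.2, 0.2, 0.2, 0.2]], [0.0, 0.1, -0.1]),
    # Output layer: 3 units -> 2 classes.
    ([[1.0, -1.0, 0.5],
      [-0.5, 1.0, 0.2]], [0.0, 0.0]),
]

probs = forward(image, layers)
for label, p in zip(["benign", "malignant"], probs):
    print(f"{label}: {p:.3f}")
```

Stacking more such layers (and, for images, typically replacing the fully connected layers with convolutional ones) is what makes a network “deep.”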

Medical imaging is an ideal application of these techniques. Ninety percent of all medical data is image data, which makes it the natural place to start. Moreover, diagnosis often consists of a series of detection and classification tasks, although many of them are still too complex for current deep learning approaches, and properly treating patients requires deductive reasoning that remains well beyond existing machine intelligence. (Rather than replacing doctors, deep learning tools complement them, providing another reliable input into the decision-making process.)

Studies applying deep learning to medical imaging generally find it a powerful pairing. These projects do, however, face their own unique obstacles.

Data Delimited

Deep learning models are only as good as the data they are built on, and that is a problem in medicine. Training data is scarce compared with imaging problems in other fields, and labeling it must be done by hand by clinicians, which is time-consuming and expensive. Medical images are also very large, so training can demand substantial storage and compute, and the images are not necessarily focused on the area of interest: an MRI scan might capture the entire torso when you only want the algorithm to look at the heart.
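One common way to deal with oversized, unfocused scans is to crop each image to the region of interest and then downsample it before training. The sketch below shows both steps, assuming a 2D grayscale slice stored as nested Python lists and a bounding box that is already known (for example, from a clinician’s annotation). The `crop` and `downsample` helpers and the toy `scan` are hypothetical, written only for illustration.

```python
def crop(image, top, left, height, width):
    """Return the height x width sub-image whose top-left corner is (top, left)."""
    if top < 0 or left < 0 or top + height > len(image) or left + width > len(image[0]):
        raise ValueError("region of interest falls outside the image")
    return [row[left:left + width] for row in image[top:top + height]]


def downsample(image, factor):
    """Average non-overlapping factor x factor blocks (dimensions must divide evenly)."""
    h, w = len(image), len(image[0])
    if h % factor or w % factor:
        raise ValueError("image dimensions must be divisible by factor")
    out = []
    for r in range(0, h, factor):
        out_row = []
        for c in range(0, w, factor):
            block = [image[r + i][c + j] for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# Hypothetical 6x6 slice; real scans can be thousands of pixels on a side.
scan = [[r * 6 + c for c in range(6)] for r in range(6)]

# Hypothetical region of interest, e.g. from a clinician's bounding-box annotation.
roi = crop(scan, top=1, left=2, height=4, width=4)
small = downsample(roi, factor=2)
print(small)  # [[11.5, 13.5], [23.5, 25.5]]
```

With NumPy arrays, the crop is a single slicing expression such as `image[top:top + height, left:left + width]`; the pure-Python version keeps the example self-contained.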

Deep learning in medical imaging is also subject to the limitations shared by all deep learning projects. If the training data do not represent a wide range of patients, the algorithm will perform poorly on the patients who are underrepresented. Face2Gene could detect Down syndrome in 80% of white Belgian children, but correctly recognized the condition in only 36.8% of African children in the Congo. How the data is collected and prepared can also limit a program’s usefulness. Researchers at Mount Sinai successfully trained an algorithm to detect pneumonia, but in three out of five tests, the model could not replicate its success on images collected by providers outside the health system it was trained on. Fortunately, these obstacles seem to diminish when models are trained on better data: after training on a data set of African children, Face2Gene’s recognition rate for that group rose to 94%, and models built on data collected from many institutions fare much better than the Mount Sinai model.
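Gaps like these often surface only when performance is broken out by patient subgroup or by the institution that collected the images, rather than reported as a single overall number. Below is a minimal sketch of that check, computing sensitivity (the share of true cases the model catches) per group. The records and the 20-point threshold are hypothetical and illustrative only; they are not data from the Face2Gene or Mount Sinai studies.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Fraction of true positive cases the model caught, computed separately per group."""
    caught = defaultdict(int)
    positives = defaultdict(int)
    for group, has_condition, predicted in records:
        if has_condition:
            positives[group] += 1
            if predicted:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}


# Hypothetical records: (subgroup or collecting site, ground truth, model prediction).
# Toy values for illustration only; not data from any study.
records = [
    ("site_A", True, True), ("site_A", True, True), ("site_A", True, True),
    ("site_A", True, False), ("site_A", False, False),
    ("site_B", True, True), ("site_B", True, False), ("site_B", True, False),
    ("site_B", True, False), ("site_B", False, True),
]

rates = sensitivity_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: sensitivity {rate:.0%}")

# Flag groups trailing the best group by more than 20 points (an arbitrary threshold).
best = max(rates.values())
lagging = [g for g, r in rates.items() if best - r > 0.20]
print("lagging:", lagging)
```

The same function works whether the grouping key is a demographic subgroup (the Face2Gene case) or the hospital that produced the images (the Mount Sinai case).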

Calling all Idealists

A lot of work remains to make AI part of real-world solutions that improve people’s lives. Whether or not you are experienced in deep learning, all the tools are available to you. The use cases are out there: the American College of Radiology has released a list of high-value use cases for AI. Often, so is the data: the NIH released a public dataset to developers in the hope that someone would design a program to improve lesion detection. In my follow-up post, I will give a thorough explanation of the tools and methodology. Until then, start thinking of a disease you’d like to see disrupted.