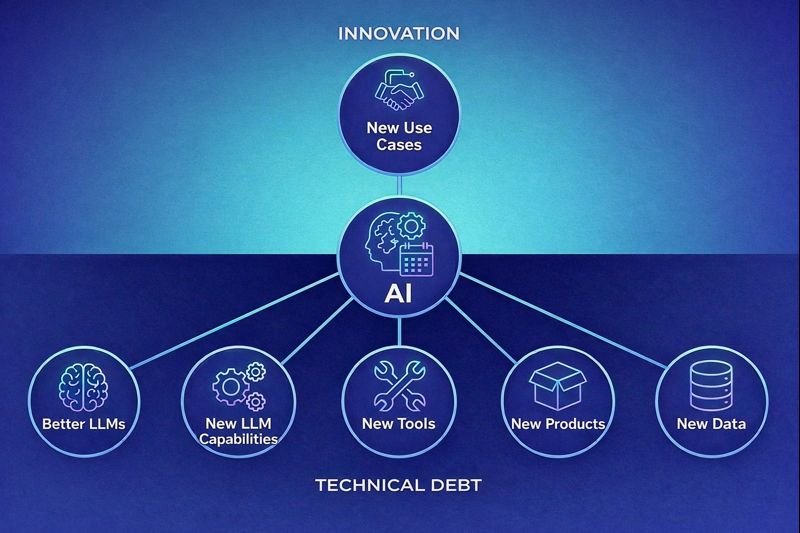

AI Is the New Technical Debt

When it comes to AI, everyone is behind.

If you haven’t deployed AI yet, you are definitely behind.

If you have deployed AI, you are behind anyway—because new use cases appear weekly, and what you shipped last month is already aging. Even the way you built it is likely no longer best practice, so you are behind again.

As one of our partners put it:

Whether the gap shows up as features you haven’t launched (but competitors have), or as features you have launched that could now be delivered faster, cheaper, and with materially better outcomes, the result is the same: AI-driven technical debt is accumulating—continuously.

It is important to understand that this is not a failure of execution. For the time being, it is a structural property of the technology. Those who adapt and develop technical and business strategies to take advantage of this period stand to reap considerable benefits.

Let’s break down what’s actually happening, and how to embrace this phenomenon.

What Is Happening?

1. The Core Reality: AI Creates Debt as Fast as It Creates Value

Since the release of ChatGPT (built on GPT-3.5) in November 2022, the industry has experienced something unprecedented: simultaneous acceleration across every layer of the stack.

Models are improving in quality, reasoning depth, and modality.

Tooling ecosystems are maturing and consolidating.

Infrastructure is abstracting away complexity at record speed.

User expectations are rising just as fast.

What makes AI uniquely destabilizing is that everything is evolving at once: models, data, interfaces, workflows, economics, and compliance expectations. Traditional software evolved layer by layer. AI evolves systemically.

The result is a paradox:

Every step forward creates new value—and new obsolescence at the same time.

This is why AI is not just another platform shift. It is a new form of technical debt, one that accrues even when you are doing everything “right.”

2. The Models Keep Getting Better

AI is not static software. It is a moving target.

A year ago, many teams fine-tuned domain-specific models for tasks like summarization, classification, or semantic search. Today, frontier models can handle those same tasks out of the box with higher accuracy, better reasoning, lower latency, and far less operational overhead.

A concrete example:

In 2023, building a reliable internal knowledge assistant often required:

A custom embedding model

A vector database

Prompt engineering

Fine-tuning

In 2025, the same capability can often be delivered via a single API call with better results.

That means the debt isn’t just in your code—it’s in:

The model you chose

The version you pinned

The assumptions you baked into your architecture

Every AI system begins aging the moment it ships. The LLM that powered your product in 2023 reflects a fundamentally different capability envelope than today’s models.
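One way to keep this kind of debt visible is to record the model choice, the pinned version, and the design assumptions as data, next to a date on which they should be re-checked. The following is a minimal sketch, not a prescribed practice: `ModelDeployment`, the model name, the version string, and the 90-day window are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ModelDeployment:
    """Hypothetical record of the AI choices behind one shipped feature."""
    feature: str
    model: str                 # the model you chose
    pinned_version: str        # the version you pinned
    shipped_on: date
    assumptions: tuple = ()    # design assumptions baked into the architecture
    review_after_days: int = 90  # assumed review window, purely illustrative

    def review_due(self, today: date) -> bool:
        """True once the deployment is at least as old as its review window."""
        return today >= self.shipped_on + timedelta(days=self.review_after_days)


assistant = ModelDeployment(
    feature="internal-knowledge-assistant",
    model="example-llm",          # placeholder, not a real model name
    pinned_version="2023-06-01",  # placeholder version string
    shipped_on=date(2023, 6, 15),
    assumptions=("text-only input", "documents must be chunked to fit the context"),
)

print(assistant.review_due(date(2023, 7, 1)))  # False: still inside the window
print(assistant.review_due(date(2025, 1, 1)))  # True: long overdue for review
```

A record like this does not reduce the debt by itself, but it turns "the assumptions you baked in" into something a roadmap review can actually list and question.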

3. Capabilities Expand — and Yesterday’s Architectures No Longer Fit

Each model generation introduces new capabilities that invalidate prior design assumptions.

Examples we see repeatedly:

From text-only to multimodal

You built chat-first. Your users now expect voice input, image understanding, screen context, or all three simultaneously.

From prompt-response to agentic workflows

You built a Q&A bot. Today, agents connected to tools through standards such as the Model Context Protocol (MCP) can browse, plan, call tools, and take actions across systems.

From reactive tools to proactive copilots

The expectation has shifted from “answer my question” to “anticipate my next step.”

From black-box to reasoning assistant

Models can now expose the step-by-step process by which they arrived at a result, along with the reasoning behind it.
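To make the shift from prompt-response to agentic workflows concrete, here is a minimal sketch of the loop at the heart of most agents: ask a planner for the next step, run the chosen tool, feed the result back, and repeat. Everything here is an illustrative assumption: the tools are stubs, and `scripted_planner` stands in for the model's decision-making. It does not use MCP or any vendor SDK.

```python
from typing import Callable, Dict, List, Optional, Tuple


# Hypothetical tools; in a real system these would call other services.
def lookup_order(order_id: str) -> str:
    return f"order {order_id}: shipped"


def send_email(message: str) -> str:
    return f"email sent: {message}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "send_email": send_email,
}


def scripted_planner(goal: str, history: List[str]) -> Optional[Tuple[str, str]]:
    """Stand-in for the model: returns the next (tool, argument), or None when done."""
    if not history:
        return ("lookup_order", "A123")
    if len(history) == 1:
        return ("send_email", history[0])
    return None


def run_agent(goal: str, max_steps: int = 5) -> List[str]:
    """Plan, act, observe, repeat, with a hard cap on the number of steps."""
    history: List[str] = []
    for _ in range(max_steps):
        step = scripted_planner(goal, history)
        if step is None:
            break
        tool, argument = step
        history.append(TOOLS[tool](argument))
    return history


print(run_agent("Tell the customer where order A123 is"))
```

A Q&A bot has no equivalent of `TOOLS` or the loop, which is why bolting agent behavior onto a chat-first architecture usually means rework rather than a small extension.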

4. The Tooling Ecosystem Evolves

It’s not just the models. The surrounding ecosystem is evolving just as fast.

Consider Retrieval-Augmented Generation (RAG):

2022

You had to assemble everything yourself—embeddings, vector DBs, chunking, caching, retrieval logic.

2023–2024

Managed vector databases, orchestration frameworks, and evaluation tools emerged from third-party vendors.

Today

Major cloud and model providers offer end-to-end RAG as a service, with observability, evaluation, and governance built in.
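For a sense of what "assemble everything yourself" meant, the sketch below wires together the pieces by hand: chunking, embedding, an index, retrieval, and prompt construction. It is a toy under stated assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, `InMemoryIndex` stands in for a vector database, and all names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, max_words: int = 12) -> List[str]:
    """Split text into fixed-size word windows (the simplest chunking strategy)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Toy stand-in for a vector database."""

    def __init__(self) -> None:
        self._rows: List[Tuple[Counter, str]] = []

    def add(self, document: str) -> None:
        for piece in chunk(document):
            self._rows.append((embed(piece), piece))

    def search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, index: InMemoryIndex) -> str:
    """Retrieval logic: fetch the best chunk and place it in front of the question."""
    context = "\n".join(index.search(question, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


index = InMemoryIndex()
index.add("Expense reports are due on the fifth business day of each month.")
index.add("The VPN client must be updated before connecting from a new laptop.")
print(build_prompt("When are expense reports due?", index))
```

Each function here corresponds to a component that teams once owned and maintained themselves, and that a managed service now bundles.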

The same pattern is repeating across:

Orchestration (LangChain, LlamaIndex, native agent APIs)

Evaluation and monitoring (TruLens, PromptLayer, OpenAI Evals)

Deployment (Assistants APIs, Tools APIs, Bedrock, Vertex AI)

Each layer you custom-built now competes with an off-the-shelf solution that is faster, cheaper, and more robust. Your custom-built stack still works—but every month you maintain it, you pay an opportunity cost.

5. Internal Innovation Is Rapidly Obsoleted by External Productization

The AI startup ecosystem is moving faster than internal teams can.

Capabilities that required weeks of internal development often emerge as polished SaaS products within months:

Prompt management

Evaluation and benchmarking

Data labeling and augmentation

Model monitoring and safety tooling

This mirrors the evolution of cloud infrastructure—but compressed into quarters instead of years. What was strategic internal innovation six months ago may already be commoditized.

6. New Use Cases Emerge Faster Than Roadmaps Can Adapt

Every new model release expands the surface area of what is economically viable.

Tasks that were previously “too unstructured” are now automatable:

Contract analysis

Customer call intelligence

Financial analysis and forecasting

Marketing content generation

Technical support triage

This forces a shift in roadmap thinking. Capabilities that were unrealistic last quarter become competitive necessities this quarter. Organizations that cannot continuously reassess what is newly possible will fall behind—even if their existing AI features are working perfectly.

7. New Data Modalities Create New Value — and New Risk

As models become multimodal, new data types suddenly matter:

Voice

Video

Screen context

Sensor and telemetry data

Voice transcription is now highly accurate in many settings. Real-time multimodal models can process spoken input, visuals, and system context simultaneously. That creates enormous value, but also new compliance, privacy, and governance challenges.

Data you are not collecting today may be the competitive moat you need tomorrow. Uncollected data is a form of strategic and technical debt.

What to Do About It

1. You Have No Choice but to Build

Waiting for AI to “stabilize” is not a strategy. By the time it does, the gap will be unbridgeable.

AI is not a product you buy; it is a competency you develop.

You cannot outsource knowing. Even if you ultimately license commercial APIs, you need internal literacy — technical, ethical, and operational — to evaluate what is possible and what is real.

If your teams do not experiment, prototype, and fail fast, they will never develop the intuition to distinguish hype from value. The cost of not building is now higher than the cost of building imperfectly. Embedded AI features age quickly; do not let them become fossilized.

You do not build AI just to deploy new features; you also build AI to learn AI.

Organizations that don’t experiment, prototype, and iterate will never develop the know-how required to compete.

2. Manage AI Debt – and Growth – Strategically

AI-driven technical debt is unavoidable, but it can and should be managed strategically.

Effective strategies include:

Plan for obsolescence

Treat every AI feature as temporary. Build explicit re-evaluation points into your roadmap.

Design for replaceability

Models, vector stores, orchestrators, and vendors should all be swappable with minimal code rewrite.

Continuously benchmark

Track accuracy, latency, cost, and compliance ruthlessly across models and vendors.

Abstract provider interfaces

Avoid hard dependencies on a single API or ecosystem.

Invest in evaluation

Automated evaluation is nascent but essential. Treat evaluation as a core competitive skill.

Prioritize learning velocity over stability

The companies that win will not be the ones that avoid risk; they will be the ones that learn fastest.

The winners will not be the companies that minimize change. They will be the ones that maximize adaptation speed.
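Several of these strategies reinforce each other in code. The sketch below shows one possible shape, assuming a single narrow interface (`TextModel`) that application code depends on, and a small benchmark that scores any implementation of it on accuracy, latency, and cost. All names, prices, and test cases are hypothetical, and `StubModel` stands in for real vendor adapters, which would wrap each vendor's SDK.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Protocol, Tuple


class TextModel(Protocol):
    """The only surface application code is allowed to depend on."""
    name: str
    cost_per_call_usd: float

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    """Stand-in for a vendor adapter (hypothetical; no real API is called)."""
    name: str
    cost_per_call_usd: float
    answer_fn: Callable[[str], str]

    def complete(self, prompt: str) -> str:
        return self.answer_fn(prompt)


@dataclass
class Scorecard:
    model: str
    accuracy: float
    avg_latency_s: float
    total_cost_usd: float


def benchmark(model: TextModel, cases: List[Tuple[str, str]]) -> Scorecard:
    """Run every (prompt, expected) case and record quality, latency, and cost."""
    correct = 0
    started = time.perf_counter()
    for prompt, expected in cases:
        if model.complete(prompt).strip().lower() == expected.lower():
            correct += 1
    elapsed = time.perf_counter() - started
    return Scorecard(
        model=model.name,
        accuracy=correct / len(cases),
        avg_latency_s=elapsed / len(cases),
        total_cost_usd=model.cost_per_call_usd * len(cases),
    )


# Tiny illustrative evaluation set: classify a support ticket.
CASES = [
    ("Ticket: I was charged twice", "billing"),
    ("Ticket: the app crashes on login", "bug"),
    ("Ticket: please add dark mode", "feature"),
]

incumbent = StubModel("incumbent", 0.010, lambda p: "billing")
challenger = StubModel(
    "challenger", 0.002,
    lambda p: "billing" if "charged" in p else "bug" if "crashes" in p else "feature",
)

for card in sorted((benchmark(m, CASES) for m in (incumbent, challenger)),
                   key=lambda c: c.accuracy, reverse=True):
    print(f"{card.model}: accuracy={card.accuracy:.2f} cost=${card.total_cost_usd:.3f}")
```

Because both models satisfy the same interface, swapping the incumbent for the challenger is a one-line change, and the same benchmark can be rerun at every planned re-evaluation point. Exact-match scoring is the simplest possible metric; real evaluation sets usually need richer scoring.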

3. Budget for Reality

AI spending is no longer an “innovation budget.” It is ongoing operational investment—like security or cloud infrastructure.

Expect three parallel budget streams:

Maintain existing capabilities

Model upgrades, refactoring, compliance updates.

Develop newly possible capabilities

Multimodal interfaces, agents, reasoning workflows.

Sustain research and evaluation

A small team focused on scanning what’s next and validating tools before the market forces your hand.

If anything, AI budgets must grow precisely because the landscape keeps moving.

The Bottom Line: Embrace Change, Enjoy the Ride!

Despite its name, AI-driven technical debt should not be thought of as a cost, but rather as an opportunity: an opportunity to deliver features and products that were inconceivable 12-24 months ago, an opportunity to amaze your customers, and an opportunity to differentiate and to win.

The companies that treat AI like traditional software—build once, optimize later—will be buried by their lack of velocity. The companies that treat AI as a living system—continuously evolving, replacing, and learning—will thrive.

AI is not just the new technical debt; it is the investment opportunity of a lifetime. Enjoy the ride!

This post will be obsolete next week.